Azure Functions

All things Azure Functions

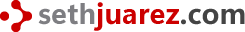

Fixing Mixed-Content and CORS issues at ML Model inference time with Azure Functions

9/10/2020

This is a follow-up to the previous post on deploying an ONNX model using Azure Functions. Suppose you've got the API working great and you want to include this amazing functionality on a brand new w...

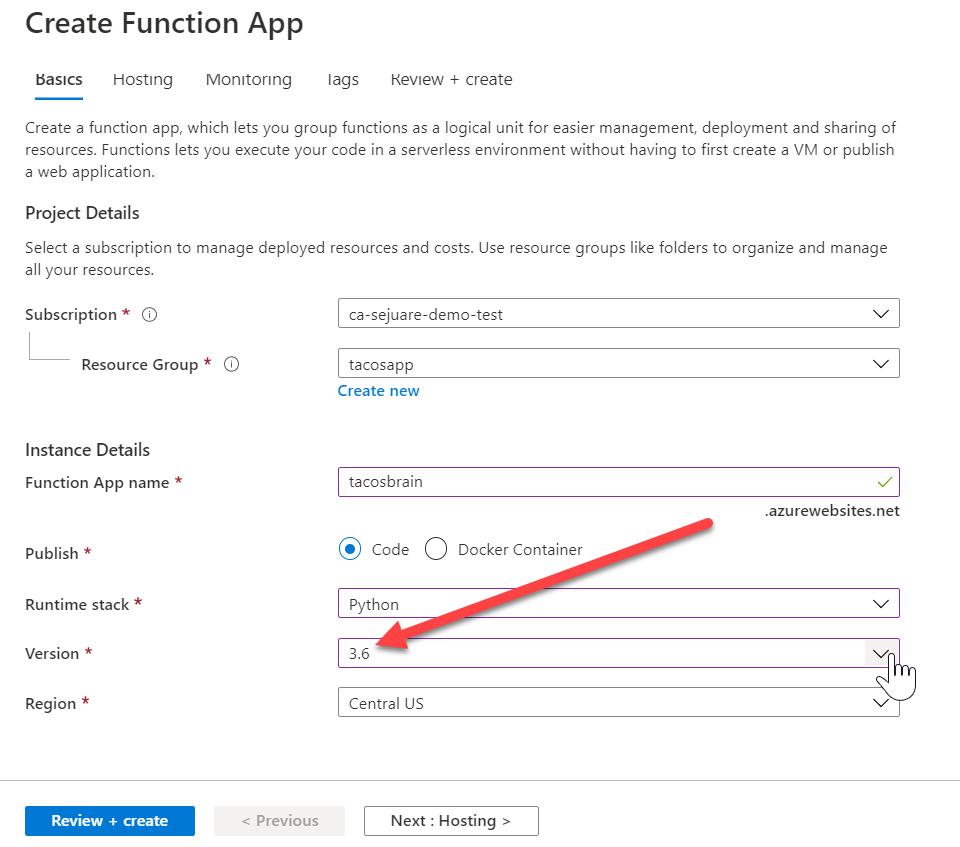

Troubleshooting an ONNX Model deployment to Azure Functions

8/20/2020

Building awesome machine learning models can be an onerous task. Once all the blood, sweat and tears have been expended creating this magical (and ethical) model, sometimes it feels like getting the th...